– Anyone can enjoy creating lyrics animation that matches their style with “TextAlive” –

Masataka Goto (Prime Senior Researcher) of the Information Technology Research Institute (ITRI; Director: Yoshio Tanaka), the National Institute of Advanced Industrial Science and Technology (AIST; President: Ryoji Chubachi), and Jun Kato (Researcher) and Tomoyasu Nakano (Senior Researcher) of the Media Interaction Group, ITRI, AIST, have developed the lyrics animation production support service “TextAlive” (http://textalive.jp), which enables users to animate lyrics in time with music using songs and lyrics published on the web. The service will be opened to the public, and demonstration experiments will begin, on September 8, 2015.

Due to the spread of music and video sharing services, many videos matched to music have been published. Lyrics animations in particular can express the lyrics of music attractively, but production requires enormous effort, from installing and learning how to use video production tools to adjusting the timing of character movement.

TextAlive is a service that combines AIST’s original music-understanding technology with newly developed programming environment technology, so that users can easily produce and share lyrics animations. A user chooses a song and specifies a style for the video; TextAlive then uses information analyzed in advance by the music-understanding technology, such as vocal timing and chorus sections, to quickly produce a lyrics animation with various effects. In addition, users can choose “templates” of visual effects for each word or character through an intuitive interface to express the effects they intend. Furthermore, users can program templates and their parameter tuning interfaces, then share the templates with other users on TextAlive. TextAlive thus allows people with diverse backgrounds to demonstrate their creativity and enjoy producing lyrics animations without enormous effort.

This research was conducted as part of a research project (Research Director: Masataka Goto) of the JST Strategic Basic Research Programs Team Research (CREST) of the Japan Science and Technology Agency (JST).

“TextAlive,” which enables users to produce and share lyrics animations easily

With the spread of music and video sharing services, the number of users who enjoy publishing music or videos matched with music is increasing. In particular, lyrics animations, in which lyrics move in time with music, are an attractive way of expressing lyrics in the form of a video. Yet, in order to produce lyrics animations, users must first install video production tools on their personal computer and learn how to use them. They must also spend considerable effort synchronizing the movement of each character with the music each time they produce a new animation. Currently, the technical barrier is very high for people who have never produced a lyrics animation but would like to make one in time with their favorite music.

AIST has worked on active music listening assistance, which allows users to enjoy a deeper comprehension of music, based on its music-understanding technology. So far, AIST has developed “Songle,” an active music listening service that visualizes the contents of online music (http://songle.jp), and “Songrium,” a music-browsing assistance service that visualizes the relationships between songs (http://songrium.jp), and has launched both to the general public (AIST/JST joint press releases on August 29, 2012 and August 27, 2013).

Through the research and development of these services, the researchers have come to understand the high degree of interest people have in getting actively involved with music-related content. Therefore, they focused on lyrics animations as a means of visually conveying lyrics, which are an important component of music. They began developing a system in which users can create, edit, and share lyrics animations online, and test-launched a beta version for researchers in November 2014. The researchers have now completed features that vastly improve usability in web browsers and allow users to choose a lyrics animation style and change the arrangement of the entire video instantly, as well as a web API that enables character animations to be embedded in external websites. They have therefore decided to launch the service to the general public for demonstration testing.

This research was conducted as part of the “Building a Similarity-aware Information Environment for a Content-Symbiotic Society (Research Director: Masataka Goto)” research project in the JST CREST “Creation of Human-Harmonized Information Technology for Convivial Society” research area.

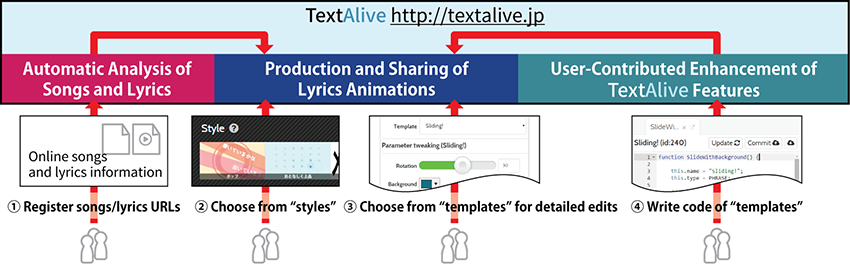

The researchers developed a system for creating, editing, and sharing lyrics animations using online music and lyrics contents, and will open it to the public as the lyrics animation production support service “TextAlive” (http://textalive.jp). TextAlive operates by the following mechanisms (Fig. 1).

- When a user registers the URLs of any music (acoustic signal files in MP3 format or music and video on music and video sharing services) and lyrics, TextAlive analyzes the content of the music and the correspondence between music and lyrics.

- When a user selects music registered to TextAlive, the music on the original website is streamed, and lyrics animation is rendered in synchrony, so the music and its lyrics can be enjoyed visually and acoustically. Users can choose arrangements for the entire video from various “styles” and switch between them instantly, easily producing lyrics animations which they prefer.

- Users can choose detailed visual effects from “templates” for each phrase, word, and character, arrange them as they like, and share lyrics animations online. Other users can add further edits to produce derivative works of shared lyrics animations.

- Users who have programming skills can edit and produce new “templates” and “parameter tuning interfaces for templates”. Because these are shared on TextAlive, this enhances the expressivity of all users.

Figure 1: Overview of the TextAlive system
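As a rough illustration of how a “style” could rearrange an entire video at once, the sketch below assigns a template to every phrase depending on whether the phrase overlaps a chorus section. It is only a minimal sketch under stated assumptions: the `Phrase` type, the style names, the template names, and the overlap rule are all hypothetical and are not taken from TextAlive itself.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Phrase:
    text: str
    start: float  # utterance start time in seconds
    end: float    # utterance end time in seconds


# Hypothetical styles: each maps a song section type to a template name.
STYLES: Dict[str, Dict[str, str]] = {
    "calm": {"verse": "fade_in", "chorus": "slide_up"},
    "lively": {"verse": "bounce", "chorus": "zoom_burst"},
}


def overlaps_chorus(phrase: Phrase, choruses: List[Tuple[float, float]]) -> bool:
    """Return True if the phrase overlaps any (start, end) chorus section."""
    return any(phrase.start < c_end and c_start < phrase.end
               for c_start, c_end in choruses)


def apply_style(phrases: List[Phrase],
                choruses: List[Tuple[float, float]],
                style_name: str) -> List[Tuple[str, str]]:
    """Pair every phrase with the template the chosen style prescribes."""
    style = STYLES[style_name]
    result = []
    for phrase in phrases:
        section = "chorus" if overlaps_chorus(phrase, choruses) else "verse"
        result.append((phrase.text, style[section]))
    return result


# Illustrative data standing in for pre-analyzed timing and chorus sections.
phrases = [Phrase("first verse line", 5.0, 9.0),
           Phrase("chorus line", 30.0, 34.0)]
choruses = [(28.0, 45.0)]

calm_plan = apply_style(phrases, choruses, "calm")
lively_plan = apply_style(phrases, choruses, "lively")
```

Because the template assignment is recomputed from the same timing data, switching the style name is enough to change the effect of every phrase, which is the behavior the mechanism above describes as changing the arrangement instantly.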

TextAlive has the following three characteristics:

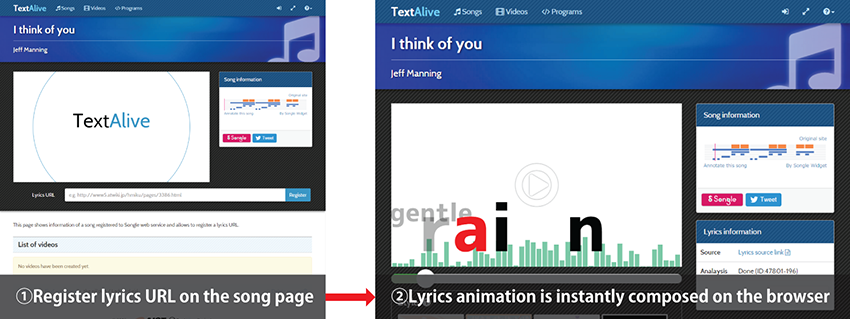

1. Enables easy production of lyrics animations based on online music and lyrics information

Users can view lyrics animations that move in time with music by entering the URL of webpages with music and lyrics posted on them into TextAlive. The lyrics animation is synthesized automatically in the user’s browser, based on lyric utterance timing information analyzed from the music and lyrics by AIST’s original music-understanding technology (Fig. 2).

Users can choose a style in the browser to instantly change the effects of the entire video and produce lyrics animations to their preference. They can also choose effects from templates and adjust them for each phrase, word, and character to create even more detailed effects. This is achieved with programming environment technology newly developed by AIST, which can execute and update, in the browser, programs that synthesize lyrics animations from lyric utterance timing (styles) and programs that control the shape changes and movement of characters (templates).

Thus, by using analysis results of the music-understanding technology in the programming environment technology, even users who have never produced a lyrics animation can enjoy producing lyrics animations timed to music with effects of their preference.

Figure 2: Procedure to create lyrics animations
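The idea of synthesizing an animation from lyric utterance timing can be sketched as a template function that, for a given playback time, returns the visual state of one character. This is a minimal sketch, not TextAlive's actual template interface: the `TimedChar` and `CharState` types, the `fade_in` template, and its `fade` and `spacing` parameters are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class TimedChar:
    text: str
    start: float  # time the character starts being sung, in seconds
    end: float    # time the character should disappear, in seconds


@dataclass
class CharState:
    text: str
    x: float
    y: float
    alpha: float  # opacity from 0.0 (transparent) to 1.0 (opaque)


def fade_in(ch: TimedChar, index: int, now: float,
            fade: float = 0.2, spacing: float = 24.0) -> Optional[CharState]:
    """Hypothetical template: show a character from its start time,
    fading it in over `fade` seconds, and hide it from its end time."""
    if now < ch.start or now >= ch.end:
        return None
    alpha = min(1.0, (now - ch.start) / fade)
    return CharState(ch.text, x=index * spacing, y=0.0, alpha=alpha)


Template = Callable[[TimedChar, int, float], Optional[CharState]]


def render_frame(chars: List[TimedChar], now: float,
                 template: Template = fade_in) -> List[CharState]:
    """Evaluate the template for every character at playback time `now`."""
    states = []
    for index, ch in enumerate(chars):
        state = template(ch, index, now)
        if state is not None:
            states.append(state)
    return states


# Illustrative timing data standing in for automatically analyzed results.
chars = [TimedChar("a", 1.0, 2.0), TimedChar("b", 1.5, 2.0)]
frame_before = render_frame(chars, 0.5)
frame_mid = render_frame(chars, 1.1)
frame_late = render_frame(chars, 1.8)
```

Since each frame is computed from the timing data and the current playback time, swapping the template function changes the look of the animation without touching the timing.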

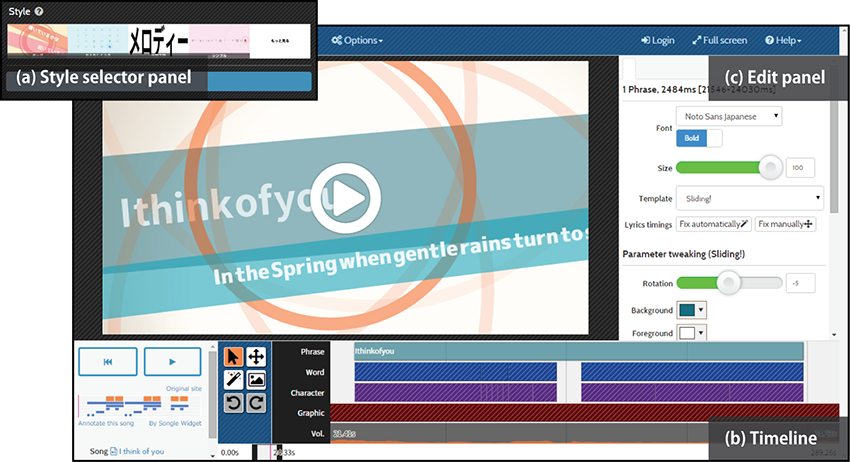

2. Supports people’s creativity with intuitive interfaces and mechanisms that simplify production of derivative work

TextAlive provides three editing interfaces with which users can easily produce lyrics animations. Thus, even users who do not have video production experience can produce lyrics animations to their preference. Because users can edit using lyrics animations produced automatically based on the chosen style, manual effort is greatly reduced even for users with experience in production (Fig. 3).

(a) Style selector panel: Using the simple interface for choosing styles, users can change effects for the entire lyrics animation instantly, and produce lyrics animations to their preference.

(b) Timeline: Users can easily search for lyrics in any part they want to see by moving the timeline cursor left and right with the mouse. In addition, automatically analyzed lyric utterance timing is displayed in colored sections by phrase, word, and character, so that by stretching sections and moving sections left and right, users can edit lyric utterance timing for any group of characters or single character, and correct errors in automatic analysis results.

(c) Edit panel: This panel is displayed when the user clicks the edit button while viewing a lyrics animation. From a large selection of templates, users can choose the font, size, motion, and transformation of the phrases, words, and characters selected on the timeline. The visual effects of templates can be easily customized with intuitive parameter tuning interfaces such as sliders.

On general video sharing services, a video is displayed as a rapid sequence of still images prepared in advance, so it is difficult to change the content of a video after it has been produced. In TextAlive, by contrast, programs such as styles and templates are executed in real time in the browser by the programming environment technology to display lyrics animations. It is therefore easy to apply the effects of an existing video to a video for other music, or to edit just part of a video. This provides a technical foundation for a culture in which various users create many derivative works from a single original work.

Figure 3: Example of editing interface screen

Because lyrics animations are saved on the TextAlive server, edited animations and programs can be shared with other users. Users can not only view lyrics animations, but also enjoy producing derivative works to their preference.
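The timeline edits described in (b), moving or stretching a section to correct lyric utterance timing, can be sketched as a rescaling of time intervals. This is a minimal sketch under an assumption: it supposes that when a word's section is moved or stretched, the timings of the characters inside it are rescaled linearly. The source does not say how TextAlive propagates such edits, and the function name `retime` is hypothetical.

```python
from typing import List, Tuple

Interval = Tuple[float, float]  # (start, end) in seconds


def retime(children: List[Interval], old: Interval, new: Interval) -> List[Interval]:
    """Map child intervals from the parent's old span onto its new span.

    Moving a section keeps the scale at 1.0; stretching it changes the scale.
    """
    old_start, old_end = old
    new_start, new_end = new
    if old_end <= old_start:
        raise ValueError("old section must have positive length")
    scale = (new_end - new_start) / (old_end - old_start)
    return [
        (new_start + (start - old_start) * scale,
         new_start + (end - old_start) * scale)
        for start, end in children
    ]


# A word sung from 1.0 s to 2.0 s, made of two characters.
word = (1.0, 2.0)
characters = [(1.0, 1.5), (1.5, 2.0)]

# Dragging the whole word one second to the right.
moved = retime(characters, word, (2.0, 3.0))

# Stretching the word so that it ends at 3.0 s instead of 2.0 s.
stretched = retime(characters, word, (1.0, 3.0))
```

Keeping the edit as a pure function of the old and new spans means the same operation can serve phrases, words, and single characters.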

3. Enables enhancement of effects and editing features by web-based programming

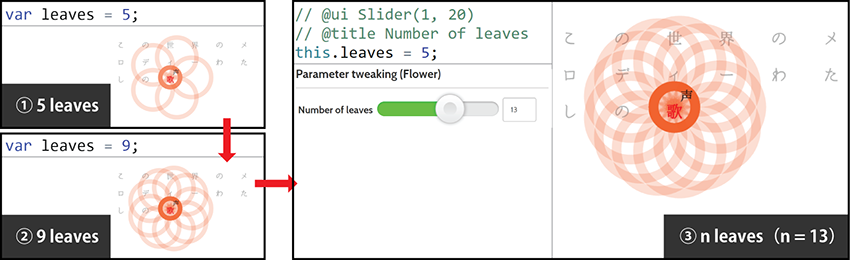

In TextAlive, users who are not satisfied with the templates prepared in advance can program their own new templates. The service realizes a new type of live programming, in which users can revise the algorithms that determine the movement of characters while a lyrics animation keeps playing, without closing the editing interface. Some features of TextAlive have been released as a web API that can be embedded in other webpages, so programmers can entertain visitors to their own webpages by displaying lyrics and character animations.

Two methods are provided as tools for users to perform programming.

(a) Programming panel: By using the programming panel in the editing panel, which is one of the TextAlive interfaces, users can display and edit a program prepared as a lyrics animation template. The edited program is executed immediately, and the lyrics animation is updated. Because the results are visible immediately, the interface is more approachable for users who are new to programming. Additionally, by simply adding comments to a program in a simple notation, users can add interfaces such as sliders, which customize the visual effect of a template, to the editing panel (Fig. 4). With these interfaces, even users who do not program can produce the effects they intend.

(b) Web API: Utilizing the web API provided by TextAlive, users can display lyrics animations on webpages, display moving contents synchronized with lyrics animations, or even animate and display text content not limited to the lyrics.

Figure 4: Example of using the programming panel

Changing the default value of the leaf number of the template program from 5 to 9 changes the number of red circles displayed. The final screen shows a slider for adjusting the number of leaves, which has been added to the editing panel. Thus, when programmers prepare such interfaces, even users who do not program can set the desired number of leaves and achieve their intended effect.
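The way a comment can turn a template parameter into a slider can be sketched as a small source scanner. The notation used here, a comment of the form `# @ui Slider(min, max)` placed directly above an integer assignment, is a hypothetical stand-in chosen for illustration; the source does not specify TextAlive's actual comment syntax, and the names `SliderSpec`, `find_sliders`, and `clamp` are likewise assumptions.

```python
import re
from dataclasses import dataclass
from typing import List


@dataclass
class SliderSpec:
    name: str
    minimum: int
    maximum: int
    default: int


# Hypothetical notation: "# @ui Slider(min, max)" on the line directly
# above an integer assignment "name = default".
ANNOTATION = re.compile(
    r"#\s*@ui\s+Slider\((\d+),\s*(\d+)\)[ \t]*\n[ \t]*(\w+)\s*=\s*(\d+)"
)


def find_sliders(source: str) -> List[SliderSpec]:
    """Collect one slider description per annotated parameter."""
    return [
        SliderSpec(name, int(lo), int(hi), int(default))
        for lo, hi, name, default in ANNOTATION.findall(source)
    ]


def clamp(spec: SliderSpec, value: int) -> int:
    """Keep a value chosen in the editing panel inside the slider range."""
    return max(spec.minimum, min(spec.maximum, value))


# Illustrative template source with one annotated parameter.
TEMPLATE_SOURCE = """
# @ui Slider(1, 12)
leaf_count = 5
"""

sliders = find_sliders(TEMPLATE_SOURCE)
chosen = clamp(sliders[0], 9)
```

In this sketch the programmer only writes the comment; the editing panel could then build a slider from each `SliderSpec` and pass the chosen value, such as 9 leaves, back into the template.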

The researchers plan to continue the research, development, and operation of “TextAlive,” the lyrics animation production support service that anyone can use from their web browser. The newly developed technology can automatically produce content based on information acquired by automatically analyzing the content of music with music-understanding technology, and the programming environment technology provides an interface that lets users move smoothly between viewing and editing content. The researchers plan to expand these technologies to a variety of applications in cooperation with industry, including music distribution services, production support for content not limited to music, and programming education.